Serverless computing is a cloud computing model that abstracts the complexity of managing servers away from developers; function as a service (FaaS) is its best-known form. In this paradigm, developers can focus on writing and deploying code without provisioning, scaling, or managing the underlying infrastructure themselves. By removing that work, serverless computing lets teams build and deploy applications more efficiently, which can reduce time to market and operational overhead.

Exploring the Essence of Serverless Computing

At its core, serverless computing revolves around executing code in stateless compute containers that are triggered by events. These events can range from HTTP requests and database modifications to file uploads and time-based schedules. When an event occurs, the cloud provider automatically provisions the resources needed to run the associated code and reclaims them once they sit idle. This is what allows serverless applications to scale with demand and to be billed only for actual use.
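
To make the event-driven model concrete, here is a minimal sketch of a stateless function written in the AWS Lambda Python handler style, `handler(event, context)`. It assumes an API Gateway-style HTTP proxy event whose `body` is a JSON string; the greeting logic and the `name` field are purely illustrative.

```python
import json


def handler(event, context):
    """Stateless entry point: everything the function needs arrives in `event`,
    and nothing is assumed to survive between invocations."""
    # Assumption: an HTTP proxy-style event whose "body" is a JSON string (or absent).
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")  # hypothetical input field
    # Response in the statusCode/headers/body shape used by API Gateway proxy integrations.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Locally simulate the platform delivering an HTTP event; `context` is unused here.
    print(handler({"body": json.dumps({"name": "Ada"})}, None))
```

On a real platform the provider calls `handler` once per event; locally it is just a function, which also makes it easy to unit-test.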

Delving into Key Features

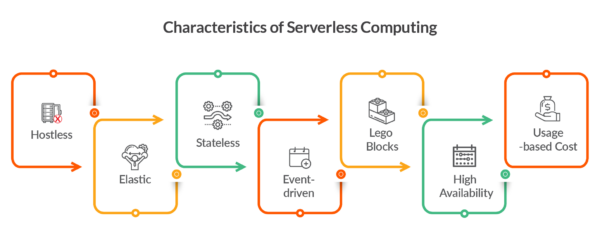

Key features of serverless computing include:

- Automatic Scaling: Serverless platforms add or remove capacity as demand changes, so applications can absorb traffic spikes without manual intervention and avoid paying for idle servers during quiet periods.

- Pay-per-Use Pricing: Users pay only for the resources consumed while their code runs, typically billed per request and per unit of execution time, rather than for provisioned capacity that may sit idle. For intermittent or spiky workloads this is often cheaper than traditional provisioning models.

- Event-driven Architecture: Serverless applications are built around an event-driven architecture, which makes it straightforward to integrate with other services and to build reactive, scalable solutions.
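
Pay-per-use pricing and automatic scaling can both be estimated with simple arithmetic. This sketch assumes a billing model of a per-request charge plus a charge per GB-second (execution time multiplied by allocated memory), which is broadly how AWS Lambda prices compute; the rates in the example are hypothetical placeholders, and free tiers, storage, and data transfer are ignored. The concurrency estimate applies Little's law: the number of busy instances is roughly the request rate times the average duration.

```python
import math


def estimate_monthly_cost(invocations, avg_duration_ms, memory_mb,
                          price_per_million_requests, price_per_gb_second):
    """Pay-per-use estimate: a per-request charge plus a charge for
    execution time weighted by allocated memory (GB-seconds)."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


def estimate_concurrency(requests_per_second, avg_duration_ms):
    """Instances busy at steady state: arrival rate x time each request occupies
    an instance (Little's law), rounded up to whole instances."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


if __name__ == "__main__":
    # Hypothetical rates for illustration only; check your provider's pricing page.
    rates = {"price_per_million_requests": 0.20, "price_per_gb_second": 0.00001}
    print(round(estimate_monthly_cost(2_000_000, 100, 512, **rates), 2))
    print(round(estimate_monthly_cost(0, 100, 512, **rates), 2))  # an idle month costs nothing
    print(estimate_concurrency(requests_per_second=50, avg_duration_ms=200))
```

The zero-invocation case is the key contrast with provisioned servers: with no traffic, the compute bill under this model drops to zero.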

Types of Serverless Computing

Serverless computing encompasses various types and implementations, including:

| Type | Description |
|---|---|
| Function as a Service (FaaS) | Allows developers to deploy individual functions or code snippets without managing the underlying infrastructure. Popular FaaS platforms include AWS Lambda, Azure Functions, and Google Cloud Functions. |
| Backend as a Service (BaaS) | Provides pre-built backend services such as authentication, databases, and file storage, enabling developers to focus on building frontend applications. Examples include Firebase and AWS Amplify. |

Utilizing Serverless Computing

Ways to leverage serverless computing include:

- Microservices Architecture: Break down applications into smaller, independent functions or services, making it easier to develop, deploy, and maintain complex systems.

- Real-time Data Processing: Process and analyze real-time data streams from IoT devices, sensors, or user interactions, enabling instant insights and actions.

- Scheduled Tasks and Cron Jobs: Execute recurring tasks such as data backups, report generation, and cleanup operations on a predefined schedule.
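
For the real-time data processing case, a function typically receives events in batches. The sketch below assumes the record layout AWS Lambda uses for Kinesis stream events, where each record's payload is base64-encoded under `Records[].kinesis.data`; the sensor JSON fields (`sensor_id`, `temperature`), the alert threshold, and the `make_record` helper are hypothetical.

```python
import base64
import json

TEMP_ALERT_C = 30.0  # hypothetical alert threshold in degrees Celsius


def handler(event, context):
    """Decode a batch of stream records and flag sensors reporting high readings."""
    readings = []
    for record in event.get("Records", []):
        # Kinesis-style records carry the producer's bytes base64-encoded.
        raw = base64.b64decode(record["kinesis"]["data"])
        readings.append(json.loads(raw))
    # Hypothetical payload fields: {"sensor_id": ..., "temperature": ...}
    alerts = [r["sensor_id"] for r in readings if r.get("temperature", 0) > TEMP_ALERT_C]
    return {"processed": len(readings), "alerts": alerts}


def make_record(payload):
    """Build a record in the same shape, for local experiments and tests."""
    data = base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii")
    return {"kinesis": {"data": data}}


if __name__ == "__main__":
    batch = {"Records": [
        make_record({"sensor_id": "s1", "temperature": 22.5}),
        make_record({"sensor_id": "s2", "temperature": 31.0}),
    ]}
    print(handler(batch, None))  # prints {'processed': 2, 'alerts': ['s2']}
```

A scheduled task looks much the same from the code's point of view: the platform invokes a handler with a timer event instead of a batch of stream records.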

Addressing Challenges and Solutions

Challenges associated with serverless computing include:

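- Cold Start Latency: The extra latency on an invocation that requires the platform to create a new execution environment, for example the first request after deployment, a request after a period of inactivity, or a request that arrives while the function is scaling out. Solutions include optimizing function packaging and leveraging provisioned concurrency.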

- Vendor Lock-In: Dependency on a specific cloud provider’s serverless platform may limit portability and flexibility. Mitigation strategies involve adopting multi-cloud or hybrid cloud architectures and adhering to industry standards.
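
Another common way to soften cold starts is to do expensive setup once per execution environment rather than once per request. The sketch below simulates this in plain Python: module-level state stands in for what a platform such as AWS Lambda retains between warm invocations of the same environment, and `_expensive_init` is a hypothetical placeholder for creating SDK clients or loading models.

```python
import time

# Module scope runs once per execution environment (during the cold start);
# warm invocations in the same environment see these values again.
_client = None
_init_count = 0


def _expensive_init():
    """Hypothetical stand-in for creating SDK clients, DB pools, or loading models."""
    global _init_count
    _init_count += 1
    time.sleep(0.05)  # simulate slow setup work
    return {"created_at": time.time()}


def get_client():
    """Create the shared client lazily on first use, then reuse it."""
    global _client
    if _client is None:
        _client = _expensive_init()
    return _client


def handler(event, context):
    client = get_client()
    # Only the first call in this process pays the setup cost.
    return {"init_count": _init_count, "client_created_at": client["created_at"]}


if __name__ == "__main__":
    first = handler({}, None)   # behaves like a cold invocation
    second = handler({}, None)  # behaves like a warm invocation
    print(first == second)      # True: the client was created only once
```

State kept this way is a cache, not storage: the platform may discard the environment at any time, so the function must still work when `_client` is `None`.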

Characteristics and Comparisons

Comparison of serverless computing with traditional server-based computing:

| Characteristic | Serverless Computing | Traditional Computing |
|---|---|---|
| Infrastructure Management | Abstracted away from developers | Requires manual provisioning and management of servers |
| Scalability | Automatic scaling based on demand | Manual scaling with fixed capacity |
| Cost Structure | Pay-per-use pricing model | Upfront investment in infrastructure |
| Development Focus | Code-centric approach | Infrastructure-centric approach |
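
The cost-structure row can be made concrete with a rough break-even estimate. Assume, purely for illustration, a fixed server costing C per month, and a serverless function billed a price p_r per request plus p_c per GB-second, with each invocation running for t seconds at m GB of memory. Serverless is the cheaper option while the monthly invocation count N stays below N*:

```latex
N^{*}\left(p_r + t\,m\,p_c\right) = C
\quad\Longrightarrow\quad
N^{*} = \frac{C}{p_r + t\,m\,p_c}

% Hypothetical values: C = 50, p_r = 2\times10^{-7}, p_c = 10^{-5}, t = 0.1, m = 0.5
N^{*} = \frac{50}{2\times10^{-7} + (0.1)(0.5)(10^{-5})}
      = \frac{50}{7\times10^{-7}}
      \approx 7.1\times10^{7}\ \text{invocations per month}
```

This ignores free tiers, data transfer, and whether a single fixed server could actually absorb that load, so it is a first comparison rather than a pricing model.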

Future Perspectives and Technologies

Future developments in serverless computing include:

- Edge Computing Integration: Extending serverless capabilities to the edge of the network, enabling low-latency processing for IoT, mobile, and edge devices.

- Hybrid and Multi-cloud Support: Enhancing portability and flexibility by facilitating seamless deployment across multiple cloud environments.

- Containerization: Integration with container orchestration platforms such as Kubernetes to provide greater control and portability for serverless workloads.
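
Until cross-cloud deployment is seamless, one practical way to keep serverless code portable, and to limit the vendor lock-in mentioned earlier, is to keep business logic free of provider-specific types and wrap it in thin adapters. The sketch assumes an AWS Lambda-style adapter for an API Gateway proxy event; `handle_order`, its pricing rule, and `generic_http_adapter` for some other platform are hypothetical.

```python
import json


def handle_order(order: dict) -> dict:
    """Provider-neutral business logic: plain dicts in, plain dicts out."""
    # Hypothetical rule: total = quantity x unit price, rounded to cents.
    total = round(order["quantity"] * order["unit_price"], 2)
    return {"order_id": order["order_id"], "total": total}


def aws_lambda_handler(event, context):
    """Thin adapter for an API Gateway proxy-style event (JSON string body)."""
    result = handle_order(json.loads(event["body"]))
    return {"statusCode": 200, "body": json.dumps(result)}


def generic_http_adapter(body_bytes):
    """Hypothetical adapter for a platform that passes raw request bytes
    and expects a (status, response bytes) pair back."""
    result = handle_order(json.loads(body_bytes))
    return 200, json.dumps(result).encode("utf-8")


if __name__ == "__main__":
    order = {"order_id": "A1", "quantity": 3, "unit_price": 2.5}
    print(aws_lambda_handler({"body": json.dumps(order)}, None))
    print(generic_http_adapter(json.dumps(order).encode("utf-8")))
```

Moving to another provider then means writing one new adapter, while `handle_order` and its tests stay unchanged.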

Serverless Computing and VPN Integration

The integration of serverless computing with VPN services opens up possibilities for enhancing security, scalability, and flexibility in network infrastructure. Potential use cases include:

- Secure Function Execution: Running serverless functions within a VPN environment to ensure data privacy and protection against unauthorized access.

- Dynamic VPN Scaling: Automatically scaling VPN resources in response to demand fluctuations, ensuring optimal performance and cost-efficiency.

- Custom VPN Solutions: Developing custom VPN solutions using serverless platforms to meet specific business requirements, such as geo-restricted access or content filtering.
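
For dynamic VPN scaling, the serverless piece is often a small function, run on a schedule or triggered by a monitoring alarm, that decides how many VPN gateway instances should be running. The sketch below covers only that decision; the event field `active_connections`, the per-instance capacity, the headroom factor, and the min/max limits are hypothetical, and actually starting or stopping instances would go through your provider's own APIs.

```python
import math


def desired_vpn_instances(active_connections, connections_per_instance,
                          headroom=0.2, min_instances=1, max_instances=10):
    """Target instance count for the current load plus spare headroom,
    clamped to configured bounds."""
    if connections_per_instance <= 0:
        raise ValueError("connections_per_instance must be positive")
    # Plan above current load so new clients can connect while scaling out.
    needed = math.ceil(active_connections * (1 + headroom) / connections_per_instance)
    return max(min_instances, min(max_instances, needed))


def handler(event, context):
    """Hypothetical event carrying the current connection count from monitoring."""
    target = desired_vpn_instances(event["active_connections"],
                                   event.get("connections_per_instance", 250))
    return {"desired_instances": target}


if __name__ == "__main__":
    print(handler({"active_connections": 1000}, None))  # {'desired_instances': 5}
```

Keeping the decision as a pure function makes the scaling policy easy to test independently of whichever API applies it.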

By embracing serverless computing, organizations can streamline development processes, improve resource utilization, and unlock new possibilities for innovation in the digital era.